A Brief History of AI in Canada.


-

1950

The Dawn of AI: The Imitation Game

Alan Turing's seminal paper, "Computing Machinery and Intelligence", published in 1950, suggests the potential of artificial intelligence.

-

1956

The Dartmouth Conference

Considered by many to be the seminal event for artificial intelligence as a field of research, the Dartmouth Summer Research Project on Artificial Intelligence was proposed by John McCarthy, the "Father of AI" and originator of the term 'artificial intelligence', along with researchers Marvin Minsky (Fellow of Mathematics, Harvard University), Nathaniel Rochester (Manager of Information Research, IBM Technologies), and Claude Shannon (Mathematician, Bell Telephone Laboratories). The aim of the summer study was to creatively build upon Alan Turing's initial conjecture that any feature of human thinking or intelligence, with enough description, can be simulated by a machine.

-

1965

Herbert Simon Prediction

Herbert Simon, AI pioneer and creator of the Logic Theory Machine, predicted in 1965 that by 1985, a machine would be capable of doing anything a human was able to do, specifically when it came to the game of chess. In reality, this milestone would come roughly 30 years later.

-

1966

Shakey the Robot Debuts

Shakey the Robot, named after its wobbly gait, was born out of the Stanford Research Institute's Artificial Intelligence Center and was the first mobile robot with the ability to perceive and reason about its surroundings. Performing tasks that required planning, route-finding, and the rearranging of simple objects, the robot greatly influenced modern robotics and AI techniques.

-

1971

DARPA Speech Understanding Program

Speech recognition made major strides in the 1970s, largely due to interest and funding from the United States Department of Defense. The DoD's DARPA Speech Understanding Program ran from 1971 to 1976 and was run by a group of researchers who aimed to produce a system that, if successful, would “Accept continuous speech from many cooperative speakers of 'the American dialect' over a good quality microphone, using a select vocabulary of 1,000 words, with less than 10% error.” Among other accomplishments, this program led to Carnegie Mellon's HARPY system, able to understand 1,011 words, approximately the vocabulary of an average three-year-old.

-

1974

AI Winter I: The Rise of Expert Systems

Despite dormant funds and a sudden lack of interest in AI research by the wider public, academics remained diligent in their work throughout the first "AI Winter" of the 1970s. Their research led to the rise of expert systems, many of which would provide the foundation for more formative AI discoveries. For example, one expert system, MYCIN, was the first backward-chaining system that allowed AI to recognize the bacteria causing infections and recommend antibiotics. Though never used in practice, it recommended the proper therapy in roughly 69% of cases, which was far better than infectious disease experts at the time.

-

1983

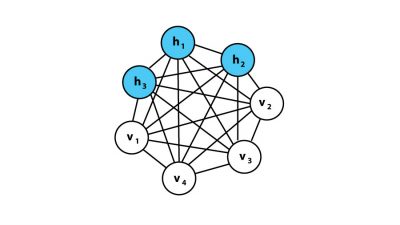

The Boltzmann Machine

Geoffrey Hinton and Terry Sejnowski invent the Boltzmann machine, a stochastic neural network that would seed their later work on neural networks. A restricted variant, the Restricted Boltzmann Machine (RBM), went on to facilitate deep learning across a variety of use cases, including voice recognition, recommendation engines, fraud detection, and sentiment analysis.

-

1990s

AI Winter II

By the early 1990s, the earliest successful expert systems, such as XCON, proved too expensive to maintain. They were difficult to update, they could not learn, and they fell prey to problems that had been identified years earlier in research in nonmonotonic logic. By 1991, the impressive list of goals penned in 1981 for Japan's Fifth Generation Computer project had not been met. As with other AI projects, expectations had run much higher than what was actually possible.

-

1997

IBM's Deep Blue sets Chess record

Initially the research project of two Carnegie Mellon students, Deep Blue got its name when the students were hired to work at IBM Research in 1989. Deep Blue was able to explore up to 200 million possible chess positions per second. The computer went up against world chess champion Garry Kasparov in 1997 and won a six-game match that lasted several days: two wins for the computer, one for the champion, and three draws. This computing feat opened developers' eyes to the possibilities of AI when applied to other complex problems. Architecture used in Deep Blue was later applied to financial modeling, data mining, and molecular dynamics with positive results. This chess challenge also paved the way for more recent challenges at IBM, like building a computer that could beat a human at Jeopardy!

-

2002

University of Alberta launches Alberta Innovates Centre for Machine Learning (AICML)

Founded to promote research in AI and Machine Learning in Alberta, AICML is advised by and home to a world-leading expert in reinforcement learning, Dr. Richard Sutton. Later rebranded as Amii, the centre would make major strides in AI, officially solving checkers in 2007.

-

2004

CIFAR supports Deep Learning despite its unpopularity

In 2004, CIFAR launched the 'Neural Computation and Adaptive Perception' program under the direction of Geoffrey Hinton. The program aimed to unlock the mystery of how our brains convert sensory stimuli into information and to recreate human-style learning in computers. The program was later rebooted in 2012 as 'Learning in Machines and Brains' and is now co-directed by Yoshua Bengio and Yann LeCun.

-

2006

Deep Learning's Breakthrough Moment

While soldiering on through the AI winter, aided partially by minimal CIFAR funding, Geoffrey Hinton and his colleagues Simon Osindero and Yee-Whye Teh developed a more efficient way to teach individual layers of neurons. Their breakthrough paper, "A fast learning algorithm for deep belief nets", helped to rebrand "neural nets" as "deep learning" and draw significant attention to this sub-field of machine learning.

-

2007

Amii (formerly AICML) Researchers Solve Checkers

Chinook, a checkers-playing program developed by Jonathan Schaeffer at the University of Alberta, working out of Amii, was the first in the world to officially solve the game of checkers and beat human champion Marion Tinsley.

-

2010

Acoustic Modeling: The Killer App

U of T researchers Navdeep Jaitly, Abdel-Rahman Mohamed, and George Dahl, along with Geoffrey Hinton, published a paper marking their breakthrough in speech recognition technology using feed-forward networks. Predicated on backpropagation technology first developed in the 1980s, this model was integrated into Google's speech recognition technology and became a part of all other major industry speech recognition systems.

-

2011

IBM's Watson wins Jeopardy!

IBM's Watson was born out of another one of IBM's Grand Challenges. After Deep Blue beat Garry Kasparov in chess, IBM decided to build a program capable of answering questions posed in natural language and designed for the specific task of competing on the popular game show Jeopardy! Developed in IBM's DeepQA project led by David Ferrucci, Watson went up against Jeopardy! champions Brad Rutter and Ken Jennings, winning with a final score of $77,147, with Jennings in second place at $24,000 and Rutter in third with $21,600.

-

2012

U of T researchers make historic strides in computer vision with AlexNet

Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton created AlexNet, a “large, deep convolutional neural network” (CNN) that won the 2012 ILSVRC (ImageNet Large-Scale Visual Recognition Challenge). This challenge, widely recognized as the annual Olympics for computer vision, pits teams against one another to develop the best computer vision model for tasks such as image detection, localization, classification, and more. AlexNet marked a pivotal moment in computer vision history; the model was the first to perform at such a high level on the historically difficult ImageNet database, with a top-5 error rate of just 15.3%. AlexNet's performance introduced CNNs to the wider computer vision community, demonstrating what was possible and paving the way for similar techniques used today.

-

2016

AlphaGo: Computer v. Lee Sedol

Google's DeepMind Challenge Match, often compared to IBM's 1997 Grand Challenge in chess, pitted DeepMind's AlphaGo system against world champion Lee Sedol in the notoriously difficult 3,000-year-old Chinese game of Go. The 4-1 victory earned AlphaGo a 9 dan professional ranking, the highest ever received by a human or computer player.

-

2016

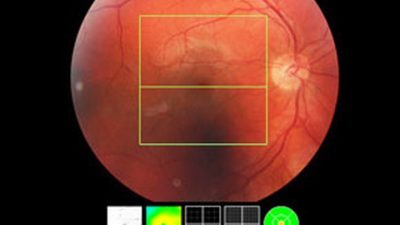

AI Retinal Imaging surpasses that of doctors

The health division of Google's DeepMind collaborated with leading health organizations to apply deep learning technology similar to that used for image recognition to retinal scanning. In 2016, the model was able to detect indicators of blindness and other retinal diseases with accuracy equal to or better than that of a human doctor.

-

2016

Element AI Launches

Element AI, founded by AI heavyweight Yoshua Bengio and his colleagues Jean-François Gagné and Nicolas Chapados, is a research incubator based in Montreal, QC. Using an "AI-first" strategy applied to big business problems, Element provides AI-as-a-Service to integrate the latest AI research into existing processes.

-

2017

RBC Research Lab Launches with focus on reinforcement learning

Dr. Richard Sutton becomes the head academic advisor to RBC machine learning research. Opening labs in Edmonton and Toronto, RBC Research aims to work with Sutton and the team at Amii to bring reinforcement learning to the forefront of the financial services industry, as well as to create an RL-centred research centre of excellence in Canada.

-

2017

Canadian Government Announces #Budget2017

In 2017, the Canadian government pledged $950 million towards developing superclusters and a pan-Canadian AI strategy to bring experts together.

-

2017

NextAI Launches

NEXT Canada launches NextAI, an AI innovation hub for entrepreneurs and researchers looking to commercialize technology with global impact.

-

2017

Vector Institute Launches

Following the Canadian government's announcement of the 2017 Budget, the Vector Institute launched. Headquartered in the MaRS Discovery District in Toronto, and with AI pioneer Geoffrey Hinton as Chief Scientific Advisor, Vector aims to collaborate with academia and industry to help produce and train a critical mass of AI talent to support innovation clusters across Canada.

-

2017

Creative Destruction Lab introduces Quantum Machine Learning Initiative

The Creative Destruction Lab at the University of Toronto's Rotman School of Management launched the Quantum Machine Learning program, with the goal of producing more well-capitalized, revenue-generating QML companies by 2022 than the rest of the world combined.

-

Today

What's Next?